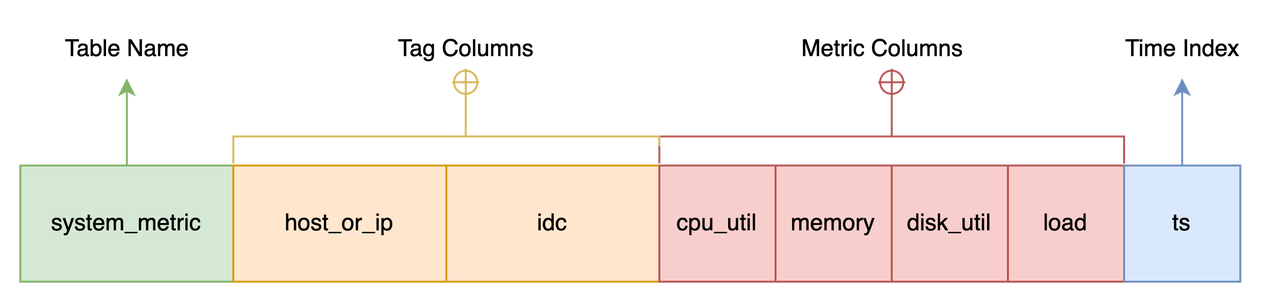

In our previous blog, it was mentioned that we designed GreptimeDB on top of the TimeSeries Table model. Users can either store data on local disks or in object storage provided by cloud service providers. These are all based on the capabilities provided by the storage engine.

When it comes to time series processing, people often write at high frequency, but with few deletions and relatively stationary query patterns(usually displayed by time ranges). So we would like to apply write-friendly LSM Tree, easily separating cold from hot, with TTL.

This blog briefly introduces the design concepts of the GreptimeDB storage engine, not diving too deep into the implementation details because the storage engine and the features mentioned are still under rapid development. We plan to deliver most of the features in our first release.

We'll walk you through the techniques in three parts. See the overall architecture and design concepts of the storage engine, then go on by explaining our smart indexes and adaptive compression functions.

Time Series Oriented LSM Tree

When designing the storage engine, we need to consider optimizing and customizing processing in time series scenarios

- Support smart indexing and adaptive compression

- Leverage the capabilities and strengths of cloud-native infrastructures

- Support non-cloud environment

- Extensibility

- Observability

- Store legacy data at a low cost

Although it sounds like a lot of work, fortunately, we don't need to develop a general purpose storage engine because, for a time series database, it's spared from complex online transactions. Regarding technology selection, aiming at time series scenarios, we are building on LSM Tree, which caters to high throughput ingestion, supports hot/cold data separation and TTL(time-to-live), and can be easily offloaded to object storage.

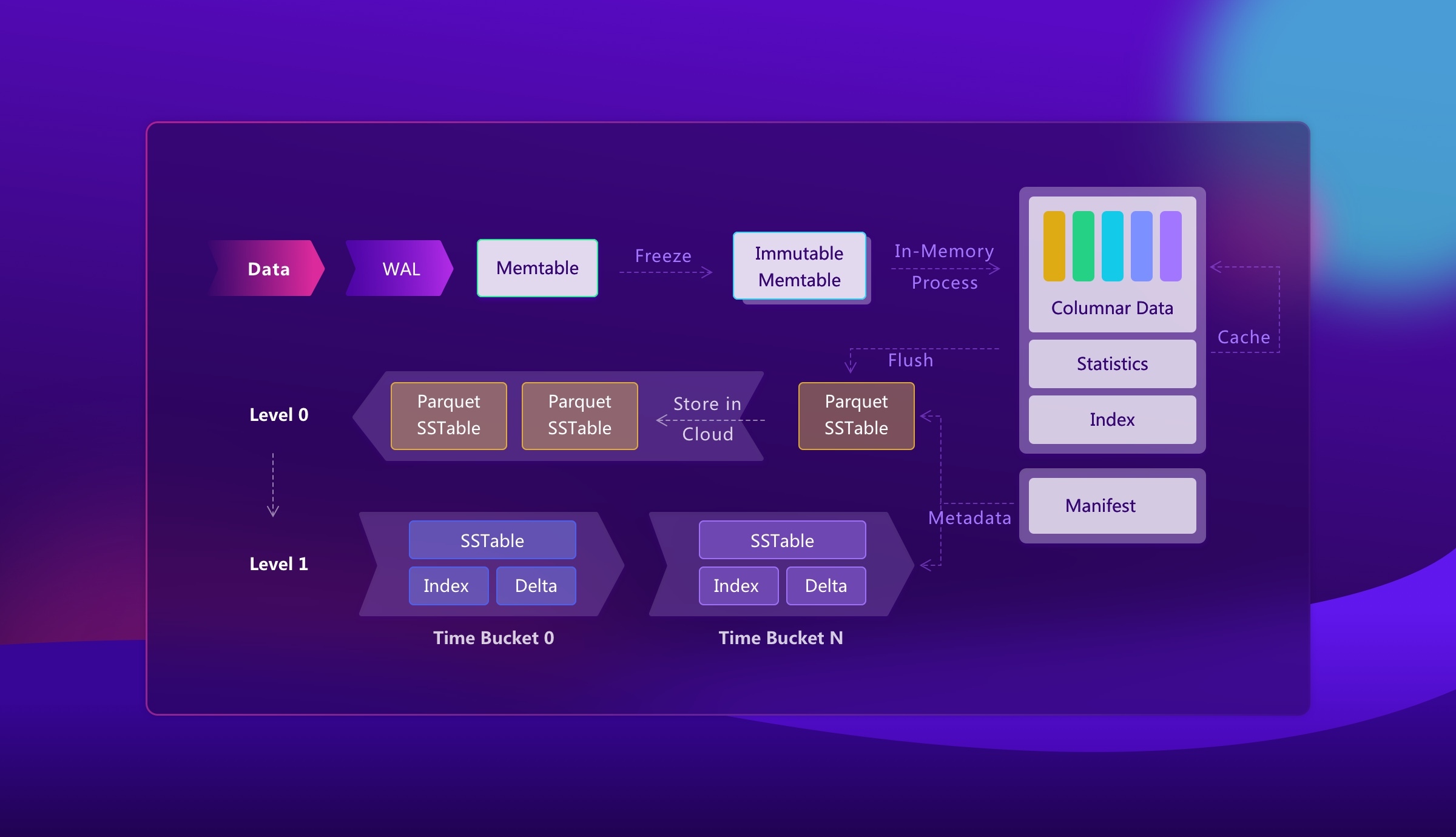

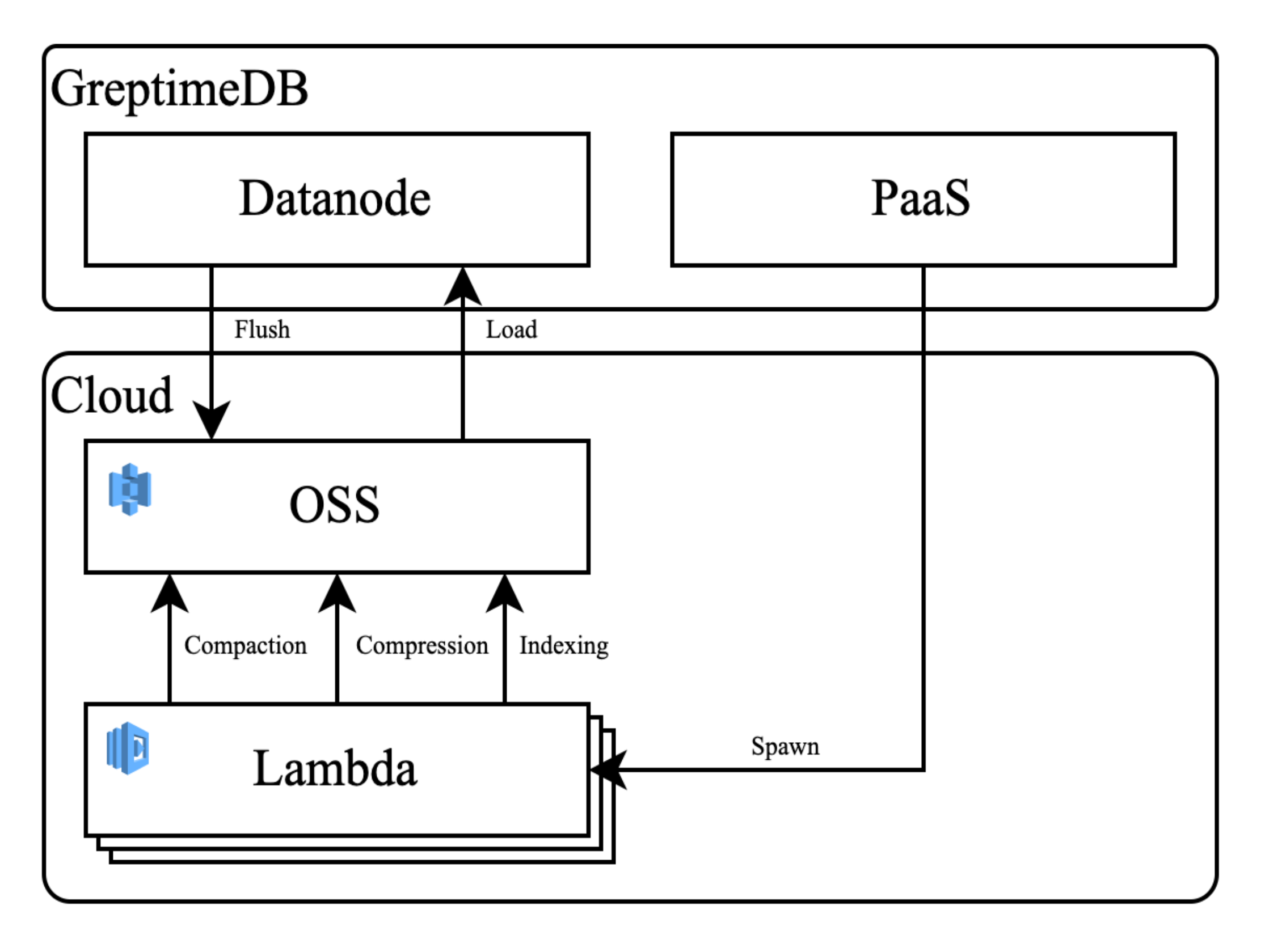

This picture shows the overall architecture of the storage engine:

As shown in the picture,

The data is written into the WAL and the memtable first, and then persisted to level 0 in the Parquet format when the data in the memtable reaches a certain threshold. These files can be stored in object storage.

- We will target the memtable to time-series scenarios, more friendly for reads and writes on one series.

Currently, we write the original user data directly to the Parquet files without processing, and meanwhile, we include some internal columns to store additional information.

- We introduced schema and columnar storage. The latter is convenient for compression and using mature formats like Parquet.

- It will further bring us more benefits to store in Parquet format when integrating with data platforms like Spark SQL.

We plan to implement Leveled Compaction to:

- Merge small files

- Partition files by time range for easy implementation of TTL (time-to-live) in an attempt to automatically select suitable time buckets for users in the future

- Build indexes for the files to improve query efficiency, which will be discussed in the second part of this article.

- Further compress the level 0 files to reduce disk usage, which will be covered in the third part of this blog

We are incorporating a distributed log storage named bunshin as the WAL of our storage engine to ensure data durability in the memtable.

To address all those ideas above, if we were to use the existing storage engine like RocksDB, it could be rather difficult and would induce unwanted complexity and troubles of maintenance. Instead, we are developing on our own, which easily entails fresh modifications and rapid iterations.

Smart Indexing

In analytical scenarios of time series, the most common query pattern is to locate the fitting series according to the given tags and time ranges. Traditional time series databases have two ways of fast searching from tags to time series:

Popular TSDBs, for example, InfluxDB use inverted indexes and will end up spilling memory to disk when the series number increases.

On the other hand, if we use MPP approach instead, every query request has to go through all the SST files, which brings up the IO overhead. Moreover, the overhead will deteriorate when the SST files are offloaded to object storage on our future cloud version.

GreptimeDB comes up with a mixed solution - build smart indexing and Massively Parallel Processing (MPP) to boost pruning and filtering.

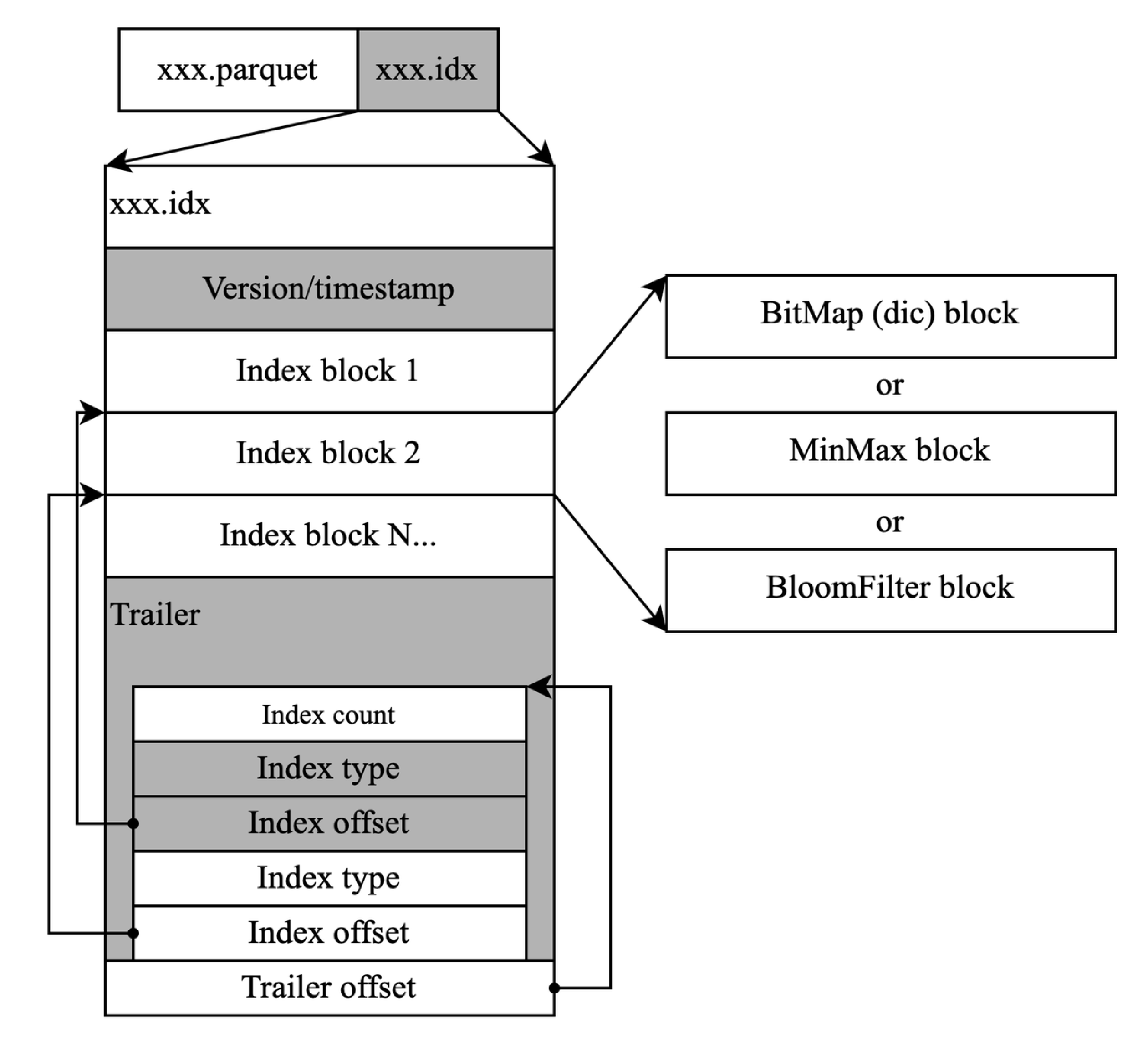

When designing GreptimeDB, the indexing should first boost scan pruning at the storage level. We use independent index files to record statistical information, like what Apache Parquet's row group metadata does. GreptimeDB's indexes permit MinMax, Dictionary, Bloomfilter, etc.

The statistical information will be helpful, if and when the query is highly selective. However, if spatial locality does not hold, results scatter around in different blocks, which lowers efficiency.

The good thing is that the analytical query pattern in time series always remains stationary. Therefore, we can use built-in metrics to record the workloads of different queries. Combining Cost-Based Optimization(CBO) with user-defined hints, we are able to build a smart index heuristically.

Adaptive Compression

Real world systems produce a huge volume of timeseries data everyday, so data compression is vital for TSDBs. If we fail to manage and compress those data, users end up costing a lot in storage and maintenance.

GreptimeDB dynamically adopts compression algorithms based on the type and cardinality of data to meet the temporal and spatial complexity constraints.

The most common data types in TSDBs are string and float. GreptimeDB dictionarizes strings when the cardinality of a block exceeds some threshold.

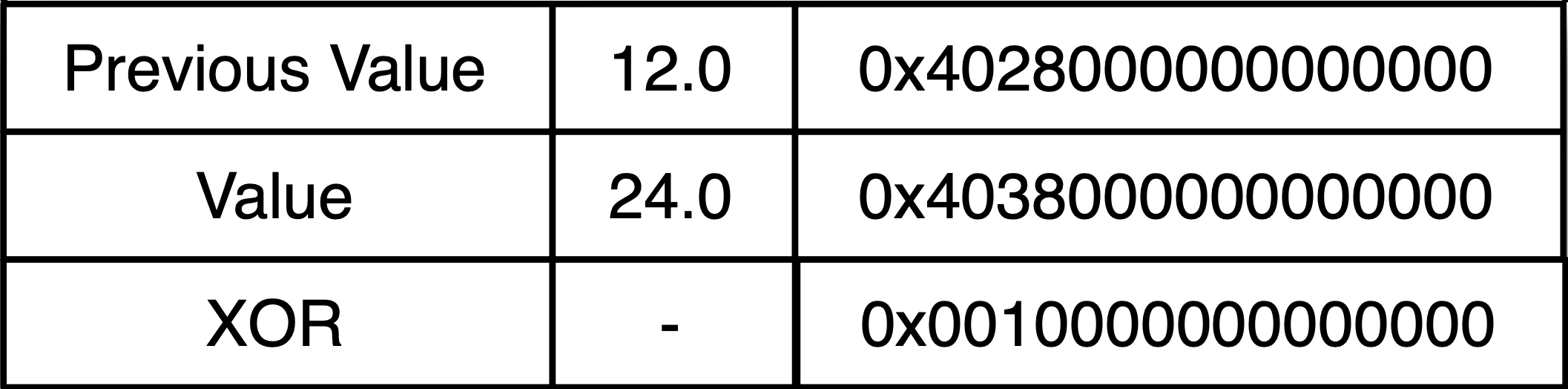

Regarding float point numbers, GreptimeDB adopts Chimp algorithm. Usually, the difference between two adjacent floats is not that significant, so lots of "0"s are produced at the beginning and end of the results after bitwise XOR operation, providing the potential to compress them.

See the example below:

By analyzing real-world time-series datasets, Chimp enhanced Gorilla's (Facebook's in-memory TSDB) algorithm, offering a higher compression rate and spatial efficiency than traditional algorithms such as zstd, Snappy, etc.

Serverless Compaction

Unlike traditional databases, GreptimeDB does not block insert requests while compressing the data, and it uses an on-demand background task like compaction to build indexes. The task is managed by a FaaS, like AWS Lambda, which can further cut the cost.

In an attempt to be more tailored to time-series scenarios, GreptimeDB plans to design the storage engine based on the LSM Tree structure, which will significantly enhance data storage and query efficiency by building smart indexes and adaptive compression.

We will continue to share our experience during our implementation of these functions. If you are interested in any of the features and designs in our planning, please join us on Slack or GitHub and let's build GreptimeDB together for the better!