Background

AWS EC2 (Elastic Compute Cloud), the compute service from Amazon Web Services, offers developers flexible, easy-to-use virtual machines. Alongside S3, it is one of the longest-established AWS services, dating back to its launch in 2006. Over nearly 17 years it has evolved continuously, which underlines its significance and reliability in the cloud computing space.

Many people new to AWS EC2 might have similar feelings:

- There are too many types of AWS EC2 (hundreds)! Which one should I choose to meet my business needs without exceeding the budget?

- If two EC2 types have the same CPU and Memory configuration, does that mean their performance is also the same?

- What is the most cost-effective EC2 payment mode?

When EC2 first launched, only two instance types were available. Today, the catalog has expanded to 781 different types. This vast selection gives developers a wide array of choices, but it also makes the decision harder.

This article will briefly introduce some tips for selecting EC2 instances to help readers choose the right EC2 type more smoothly.

Model Classification and Selection

Meet the EC2 family

Although AWS has hundreds of EC2 types, they fall into only a few major categories, listed below:

General Purpose, M and T series: provide a balance of CPU, memory, and network resources, sufficient for most scenarios;

Compute Optimized, C series: Suitable for compute-intensive services;

Memory Optimized, R and X series: Designed to provide high performance for workloads processing large data sets;

Accelerated Computing: Use hardware accelerators or coprocessors to execute functions such as floating-point calculations, graphics processing, or data pattern matching more efficiently than software running on CPUs;

Storage Optimized: Designed for workloads that require high-speed, continuous read and write access to very large data sets on local storage;

HPC Optimized, HPC series: A newer category from AWS, mainly suitable for applications that require high-performance processing, such as large complex simulations and deep learning workloads.

Typically, each specific EC2 type belongs to a Family with a corresponding numerical sequence. For example, for the General Purpose type M series:

- M7g / M7i / M7i-flex / M7a

- M6g / M6i / M6in / M6a

- M5 / M5n / M5zn / M5a

- M4

The numerical sequence reveals that M7 represents the latest generation, whereas M4 is comparatively older. Generally, a higher number indicates a more recent model and CPU type, and often, the pricing is more favorable due to the natural depreciation of hardware.
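As a rough illustration of reading the generation out of a family name, the sketch below splits names like the ones listed above into series letters, generation number, and attribute suffix, then sorts them newest first. The regular expression and the helper names (`parse_family`, `newest_first`) are assumptions made for this example, not an official AWS naming grammar, so unusual family names may not match.

```python
import re

# Assumed pattern: series letters, generation digits, optional attribute suffix.
FAMILY_RE = re.compile(r"^([a-z]+?)(\d+)([a-z-]*)$")


def parse_family(family: str) -> tuple[str, int, str]:
    """Split a family name such as 'M7i-flex' into (series, generation, attributes)."""
    match = FAMILY_RE.match(family.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized family name: {family!r}")
    series, generation, attributes = match.groups()
    return series, int(generation), attributes


def newest_first(families: list[str]) -> list[str]:
    """Sort family names so the highest generation number comes first."""
    return sorted(families, key=lambda name: parse_family(name)[1], reverse=True)


families = ["M4", "M6g", "M7i-flex", "M5zn", "M7a"]
ordered = newest_first(families)
print(ordered)
```

Sorting only by the generation number keeps the comparison simple; it says nothing about which CPU vendor or attribute suffix is preferable within a generation.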

Key Parameters

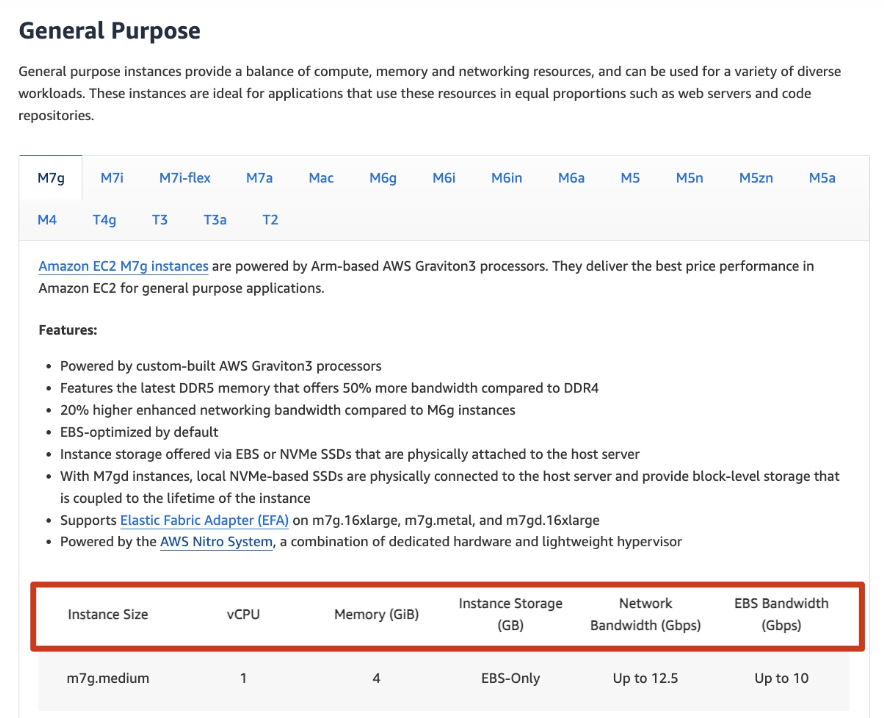

We can extract the following key parameters from the AWS EC2 model introduction.

Specific Model of EC2: Generally named as `<family>.<size>`, like `m7g.large` / `m7g.xlarge`. For EC2, a given model name is unique globally;

CPU and Memory Size: The number of vCPUs and the size of Memory. Most EC2 models have a 1:4 ratio, i.e., the ratio of the number of vCPUs to Memory in GiB. For example, when there is 1 vCPU, Memory is usually 4GiB; when there are 2 vCPUs, Memory is usually 8GiB.
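The 1:4 rule of thumb is simple arithmetic, and a small helper can flag configurations that depart from it. This is a minimal sketch: the function names and the sample configurations are hypothetical and used only to show the calculation, not taken from any AWS price list.

```python
def memory_per_vcpu(vcpus: int, memory_gib: float) -> float:
    """Return GiB of memory available per vCPU."""
    if vcpus <= 0:
        raise ValueError("vcpus must be positive")
    return memory_gib / vcpus


def describe_ratio(vcpus: int, memory_gib: float, typical: float = 4.0) -> str:
    """Compare a configuration against the common 1:4 vCPU-to-memory ratio."""
    ratio = memory_per_vcpu(vcpus, memory_gib)
    if ratio == typical:
        return "matches the common 1:4 ratio"
    if ratio < typical:
        return "less memory per vCPU than 1:4"
    return "more memory per vCPU than 1:4"


# Hypothetical configurations: (label, vCPUs, memory in GiB).
samples = [("config-a", 1, 4), ("config-b", 2, 8), ("config-c", 2, 4), ("config-d", 2, 16)]
for label, vcpus, memory_gib in samples:
    print(label, memory_per_vcpu(vcpus, memory_gib), describe_ratio(vcpus, memory_gib))
```

A configuration with less memory per vCPU leans toward compute-heavy work, while one with more leans toward memory-heavy work, which is a quick way to sanity-check a candidate against the workload.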

Instance Storage: EC2 can generally mount different types of persistent storage disks, mainly:

EBS: Mounted AWS distributed block storage service, which is usually the default choice for most EC2 models. Some models only have the option to use EBS, which is bound to a specific AZ. Although its read/write latency is higher than local SSD, it's acceptable in most scenarios. EBS also has different types based on parameters like IOPS and throughput, such as:

- gp2/gp3: General-purpose SSD volumes, with gp3 being officially recommended for better cost-performance. gp3 defaults to 3000 IOPS, and IOPS can be increased on demand without any downtime, though this comes with additional costs;

- io1/io2: Stronger performance and higher price, also supporting features like Multi Attach (usually, other types of EBS can only be mounted on one EC2);

Local Storage: Some models support local storage in addition to mounting EBS, but of course, they are more expensive. Generally, these models will have a `d` in their model name. For example, `m7g.large` is an EBS-Only model, while `m7gd.large` has 1 x 118GiB NVMe SSD local storage. Some special models also support larger local HDDs;
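The naming hint for local storage can be checked mechanically. The sketch below looks for a `d` among the attribute letters that follow the generation number. The regular expression and the function name `has_local_storage_hint` are assumptions for illustration; this is a naming heuristic only, and some families may ship with local disks without following it, so the official specification remains the source of truth.

```python
import re

# Assumed pattern: <series><generation><attributes>.<size>
TYPE_RE = re.compile(r"^([a-z]+?)(\d+)([a-z-]*)\.([a-z0-9-]+)$")


def has_local_storage_hint(instance_type: str) -> bool:
    """Return True when the attribute letters after the generation include 'd'."""
    match = TYPE_RE.match(instance_type.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized instance type: {instance_type!r}")
    attributes = match.group(3)
    # Only inspect the letters before any hyphenated suffix such as '-flex'.
    letters = attributes.split("-")[0]
    return "d" in letters


for name in ("m7g.large", "m7gd.large", "m7i-flex.large"):
    print(name, has_local_storage_hint(name))
```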

EBS Bandwidth: For some newer and specifically EBS-optimized EC2 models, AWS equips them with dedicated EBS bandwidth. This means that in high data throughput scenarios, EBS-optimized models can always enjoy better throughput without competing for network bandwidth on the local machine;

Network Bandwidth: The network bandwidth corresponding to the EC2 model;

CPU Model: In most scenarios, we can see CPUs from the following manufacturers:

- AWS's self-developed Graviton processor based on the ARM architecture (currently up to Graviton 3), such as the M7g series;

- Intel x86-64 architecture CPU;

- AMD x86-64 architecture CPU;

Generally, for similar configurations, the pricing trend is Intel being the most expensive, followed by AMD, and then Graviton, with the performance ranking inversely. For general scenarios that are performance-insensitive, users can consider using ARM architecture models, which offer greater cost-effectiveness.

AWS is one of the earliest cloud vendors to introduce ARM architecture into the server CPU field. After years of R&D, Graviton CPU has made significant progress and has a great competitive advantage in cost-performance. It is expected that more customers will use Graviton CPU models in the future.

Virtualization Technology: Various EC2 models employ distinct virtualization technologies, resulting in differences in their technical parameters. For example, for newer EC2 models, Nitro virtualization technology is generally applied. Nitro is AWS's latest virtualization technology, offloading many virtualization behaviors to hardware, making the software relatively lighter and virtualization performance stronger. From the user's perspective, identical configurations will yield enhanced performance due to reduced virtualization overhead.

Whether suitable for Machine Learning Scenarios: With the development of LLM technology, more and more vendors are choosing to train their models in the cloud. If you want to train models on AWS EC2, the Accelerated Computing category is generally the right choice, such as:

P series and G series models: They use Nvidia's GPU chips. At the re:Invent 2023 conference, Nvidia and AWS started a deeper strategic cooperation. AWS plans to use Nvidia's latest and most powerful GPUs to create a computing platform specifically for generative AI;

Trn and Inf series: In addition to using Nvidia GPUs, AWS also develops its own chips for machine learning, such as the Trainium chip for training and the Inferentia chip for model inference. Trn series and Inf series EC2 models correspond to these two AWS-developed machine learning chips respectively;

Key Takeaways

Building on the overview provided above (and there's much more to explore about EC2), we've compiled a few tips for users to consider when selecting EC2 instances.

Typically, for most EC2 models, a higher sequence number indicates a newer CPU model. This generally means better performance and, interestingly, a more cost-effective pricing structure – essentially, you get more bang for your buck.

Among the general-purpose EC2 models, the T series is relatively cheap and offers a Burstable CPU feature: the instance accumulates CPU credits while running below its baseline performance, and when load rises above the baseline, it can spend those credits to run above baseline for a limited time (without changing the cost). However, this also means the T series won't deliver very high sustained performance, and it generally has low bandwidth and no EBS optimization. Therefore, the T series is more suitable for test environments where performance is not being validated;
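To make the credit mechanism more concrete, here is a deliberately simplified simulation. It assumes one CPU credit equals one vCPU running at 100% for one minute, and that an instance with no credits can deliver only its baseline. The class name, function name, and the parameter values (2 vCPUs, 10% baseline, a 288-credit cap) are illustrative assumptions; real behavior has more details that this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class BurstableModel:
    vcpus: int
    baseline: float     # sustained utilization per vCPU, e.g. 0.10 for 10%
    max_credits: float  # cap on the accumulated credit balance


def simulate(model: BurstableModel, demand: list[float], start_credits: float = 0.0):
    """Step minute by minute; return (delivered utilization, credit balance) pairs.

    `demand` holds the requested average utilization per vCPU (0.0 to 1.0).
    """
    balance = start_credits
    trace = []
    for wanted in demand:
        earned = model.baseline * model.vcpus  # credits earned this minute
        available = balance + earned
        spent = min(wanted * model.vcpus, available)
        balance = min(available - spent, model.max_credits)
        trace.append((spent / model.vcpus, balance))
    return trace


model = BurstableModel(vcpus=2, baseline=0.10, max_credits=288)
# One idle hour to build up credits, then ten minutes of full load.
trace = simulate(model, [0.0] * 60 + [1.0] * 10)
print(trace[59], trace[60], trace[-1])
```

In this toy run the idle hour banks enough credits for a few minutes of full-speed work, after which the delivered utilization falls back to the baseline.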

Within the general-purpose series, if you're aiming for cost-efficiency, it's advisable to prioritize AWS ARM architecture models;

The official EC2 pricing page on the AWS website is very difficult to read. It is recommended to use Vantage to check price information (it is also an open-source project);

For most cloud users, the cost of EC2 is generally their major expense. Here are a few ways to reduce this cost as much as possible:

Fully utilize the elasticity of the cloud: make your architecture as flexible as possible, and use on-demand computing power. You can use AWS's Karpenter or Cluster Autoscaler to make your EC2 flexible and scalable;

Use Spot instances: Spot instances can be 30% to 90% cheaper than On-Demand instances, but they are subject to preemption and can't be relied on for long-term stable operation. AWS will notify you 2 minutes before preemption, then proceed with it. Spot instances, if well-managed at the underlying level, are very suitable for elastic computing and interruption-tolerant scenarios. For example, the SkyPilot project uses different cloud Spot instances for machine learning training;
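The two-minute warning is only useful if the workload acts on it. The sketch below assumes the interruption notice arrives as a small JSON document with an `action` field and a UTC `time` field, which is how the notice is commonly described for the instance metadata service; treat that payload shape, the sample values, and the function names as assumptions. Fetching the notice is left out so the example stays self-contained.

```python
import json
from datetime import datetime, timezone


def seconds_until_interruption(notice_json: str, now: datetime) -> float:
    """Return the seconds left before the action time stated in the notice."""
    notice = json.loads(notice_json)
    deadline = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ")
    deadline = deadline.replace(tzinfo=timezone.utc)
    return (deadline - now).total_seconds()


def can_checkpoint(notice_json: str, now: datetime, checkpoint_seconds: float) -> bool:
    """Decide whether a checkpoint of the given duration still fits before preemption."""
    return seconds_until_interruption(notice_json, now) >= checkpoint_seconds


# Hypothetical notice and clock reading, two minutes apart.
sample_notice = '{"action": "terminate", "time": "2024-01-01T12:02:00Z"}'
sample_now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
remaining = seconds_until_interruption(sample_notice, sample_now)
print(remaining, can_checkpoint(sample_notice, sample_now, checkpoint_seconds=90))
```

Passing `now` in explicitly keeps the function easy to test; a real handler would poll for the notice and use the current UTC time.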

Optimize payment modes: Beyond technical approaches, cost reduction can also be achieved by purchasing Savings Plans. These plans offer lower unit costs compared to On-Demand pricing, though they come with decreased flexibility. This makes them more suited for scenarios with relatively stable business architectures.
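The trade-off between a lower unit cost and decreased flexibility comes down to a break-even calculation: a commitment is paid whether or not the capacity is used, so it only wins above a certain number of hours per month. The sketch below models a single instance with hypothetical hourly rates (0.10 On-Demand, 0.07 committed) and a 730-hour month; the rates, the function names, and the one-instance model are all assumptions for illustration, not real prices.

```python
HOURS_PER_MONTH = 730  # common approximation for an average month


def monthly_on_demand_cost(hourly_rate: float, hours_used: float) -> float:
    """On-Demand is billed only for the hours actually used."""
    return hourly_rate * hours_used


def monthly_committed_cost(committed_hourly_rate: float) -> float:
    """A commitment is billed for every hour of the month, used or not."""
    return committed_hourly_rate * HOURS_PER_MONTH


def breakeven_hours(on_demand_rate: float, committed_rate: float) -> float:
    """Hours of monthly usage above which the commitment becomes cheaper."""
    return monthly_committed_cost(committed_rate) / on_demand_rate


def cheaper_option(on_demand_rate: float, committed_rate: float, hours_used: float) -> str:
    on_demand = monthly_on_demand_cost(on_demand_rate, hours_used)
    committed = monthly_committed_cost(committed_rate)
    return "commitment" if committed < on_demand else "on-demand"


# Hypothetical rates in dollars per hour.
ON_DEMAND_RATE, COMMITTED_RATE = 0.10, 0.07
threshold = breakeven_hours(ON_DEMAND_RATE, COMMITTED_RATE)
print(round(threshold, 1))
print(cheaper_option(ON_DEMAND_RATE, COMMITTED_RATE, hours_used=300))
print(cheaper_option(ON_DEMAND_RATE, COMMITTED_RATE, hours_used=700))
```

With these made-up rates, a workload that runs only part of the month stays cheaper on On-Demand, while one that runs nearly continuously benefits from the commitment, which matches the advice to reserve commitments for stable architectures.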

Conclusion

Efficient selection and utilization of EC2 should be tailored to the user's unique scenarios, requiring continuous and iterative optimization. In summary, leveraging the cloud's elasticity and understanding the key parameters of various EC2 models is essential for every AWS user.