Introduction

Large Language Models (LLMs) are revolutionizing AI-driven applications with their powerful reasoning and knowledge integration capabilities. However, one fundamental limitation remains: LLMs are constrained by their training data cutoff and cannot access real-time information. This poses a critical challenge in scenarios where fresh, up-to-date data is essential.

Imagine an expert locked inside a library with no windows. The expert has access to an extensive collection of books (pre-trained knowledge), but the library’s contents remain unchanged, making it impossible to incorporate the latest developments. This is where Model Context Protocol (MCP) comes into play. MCP provides a standardized mechanism for LLMs to query and analyze external data sources dynamically, bridging the "Data Recency Gap".

For industries that rely on real-time insights, such as system observability, IoT analytics, and financial markets, the need for LLMs to access fresh data is even more pressing. GreptimeDB MCP Server enables seamless integration between LLMs and observability databases, giving AI applications real-time data analysis capabilities. In this article, we explain how MCP works, introduce the architecture of GreptimeDB MCP Server, and walk through practical examples that demonstrate its potential in AI-driven applications.

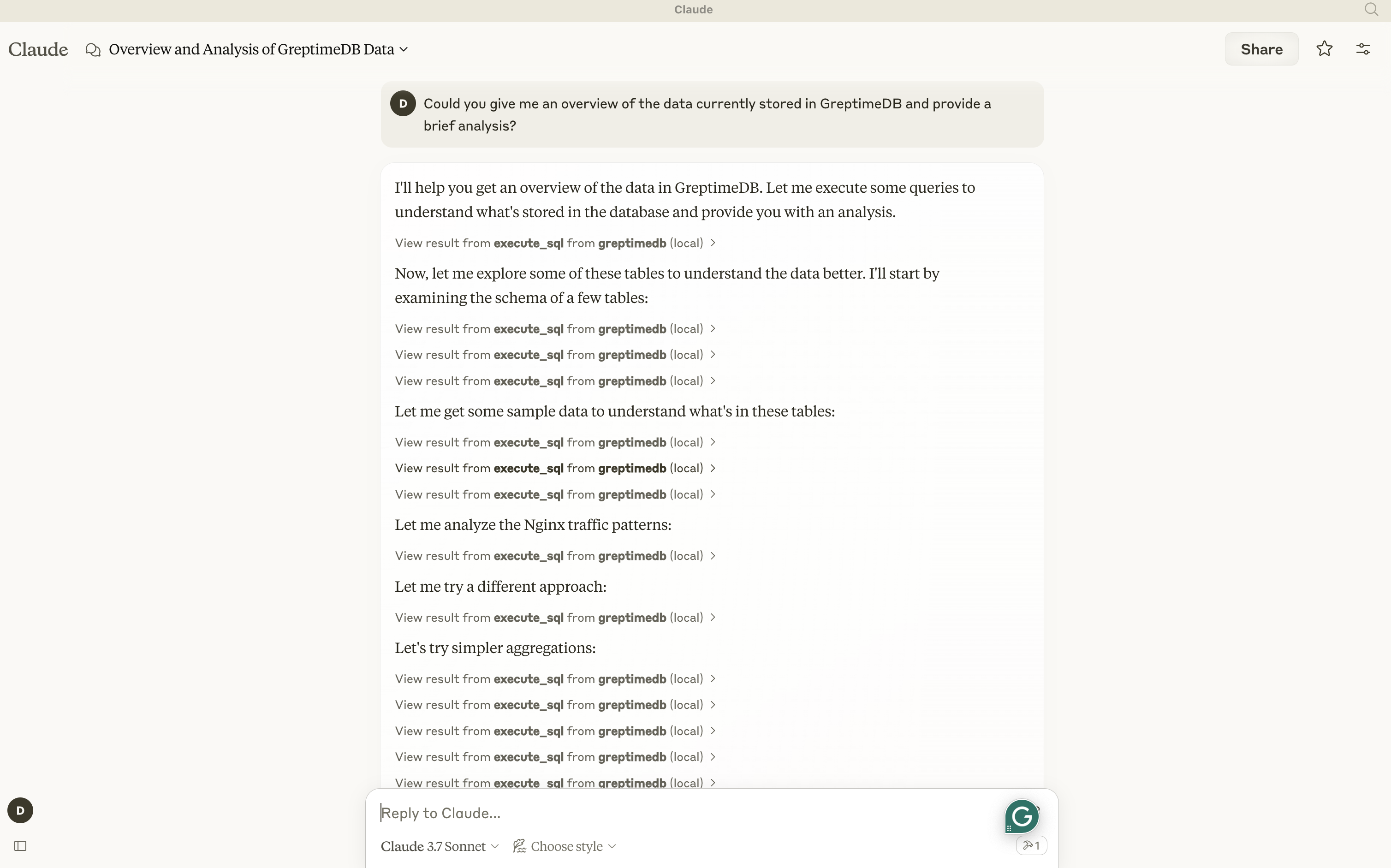

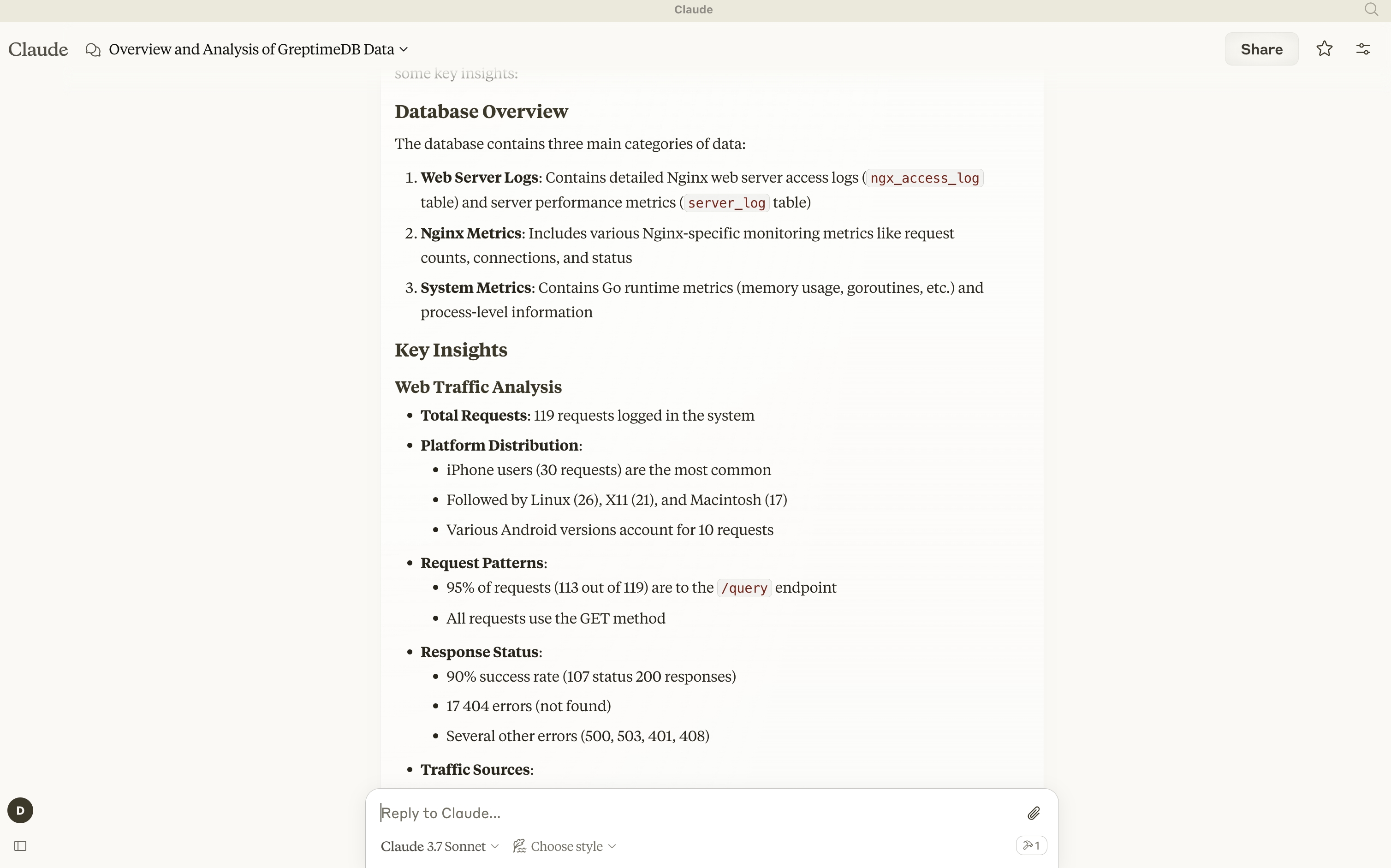

Check out the demo video for an intuitive understanding of how LLMs, MCP, and GreptimeDB work together!

Understanding Model Context Protocol (MCP)

What is MCP?

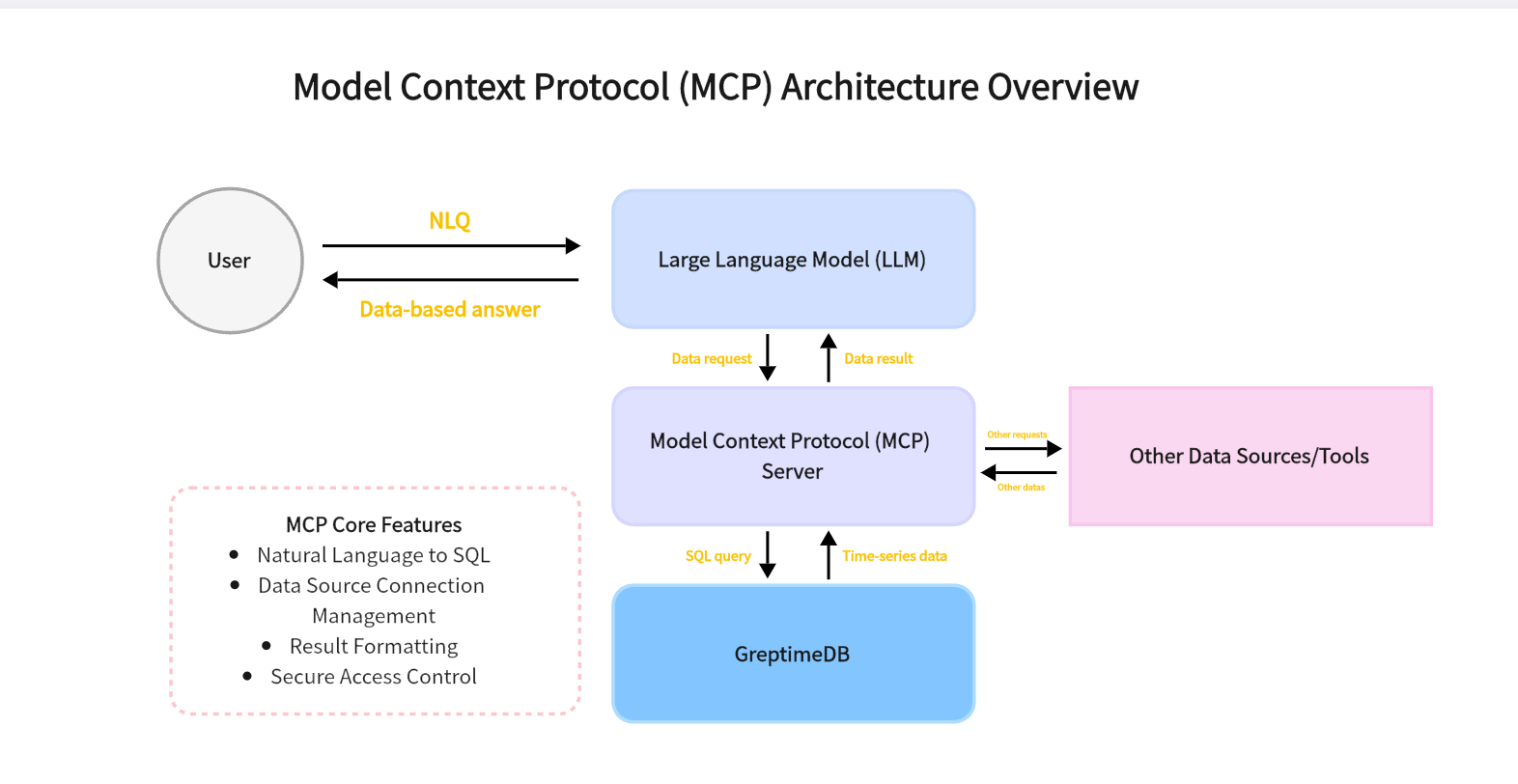

Model Context Protocol (MCP) is an interface standard designed to bridge LLMs with external data sources. Traditional LLMs lack direct access to real-time or domain-specific data, limiting their effectiveness in dynamic scenarios. MCP addresses this by enabling LLMs to interact with external data sources and tools in a structured manner.
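Under the hood, MCP messages are JSON-RPC 2.0 requests and responses exchanged between an MCP client (such as Claude Desktop) and an MCP server. The minimal Python sketch below builds a "tools/call" request, which is how a client asks a server to run one of its tools. The tool name execute_sql and its query argument are assumptions for illustration; a real server advertises its actual tools in its "tools/list" response.

```python
import json
from typing import Any


def build_tool_call(request_id: int, tool_name: str, arguments: dict[str, Any]) -> str:
    """Serialize an MCP "tools/call" request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",        # MCP uses JSON-RPC 2.0 framing
        "id": request_id,        # lets the client match the response to this request
        "method": "tools/call",  # MCP method for invoking a server-side tool
        "params": {
            "name": tool_name,       # hypothetical tool name, e.g. "execute_sql"
            "arguments": arguments,  # tool-specific arguments (assumed shape)
        },
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical tool and argument names; check the server's tools/list response.
    print(build_tool_call(1, "execute_sql", {"query": "SHOW TABLES"}))
```

The client never runs SQL itself: it only forwards the tool name and arguments chosen by the LLM, and the server decides how to execute them.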

How MCP Works

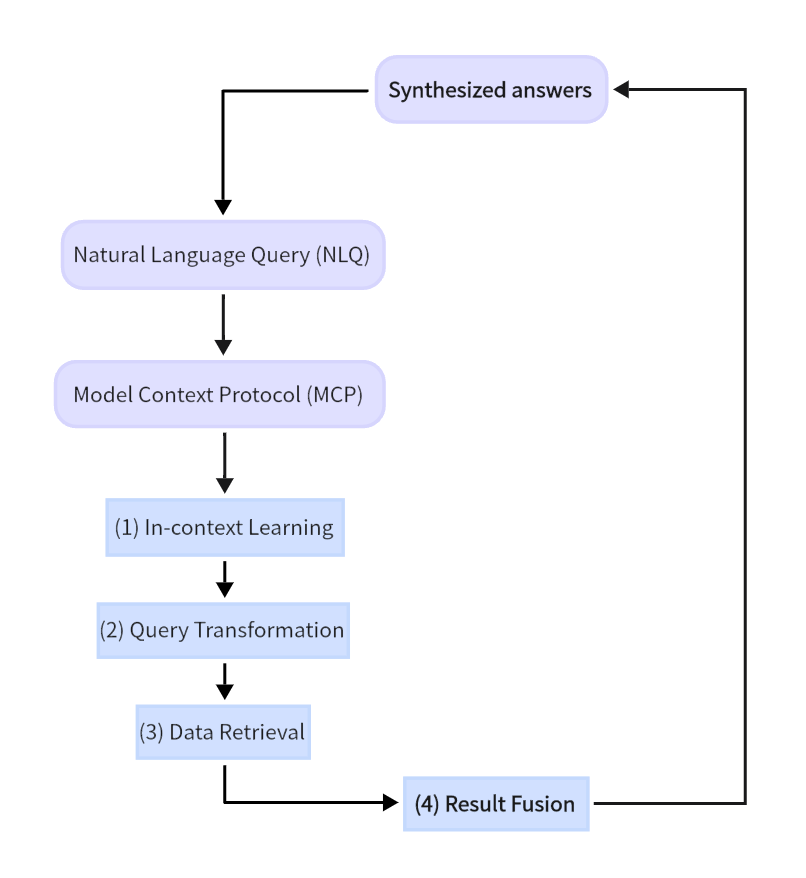

An MCP-based workflow lets an LLM retrieve and analyze external data through a structured process:

- Context Identification – The LLM determines whether a user query requires external data retrieval.

- Query Transformation – The LLM converts the natural language query into a structured query (e.g., SQL).

- Data Retrieval – The MCP server executes the structured query against the external data source and returns the results.

- Result Fusion – The LLM integrates the retrieved data with its pre-existing knowledge to generate a response.

- Feedback Optimization – Based on the results, the LLM can refine its queries over multiple rounds to improve accuracy and retrieval efficiency.

By implementing MCP, LLMs can surpass their training limitations and leverage real-time data for enhanced decision-making.
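The five steps above can be illustrated with a deliberately simplified Python sketch. It is not how MCP or an LLM actually works: a keyword check stands in for the LLM's context identification, a fixed SQL template stands in for query transformation, and an in-memory SQLite table with made-up rows stands in for GreptimeDB. All function, table, and column names are hypothetical.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a hypothetical in-memory access log standing in for GreptimeDB."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE access_log (ts INTEGER, status INTEGER)")
    conn.executemany(
        "INSERT INTO access_log VALUES (?, ?)",
        [(1, 200), (2, 404), (3, 200)],  # made-up sample rows, no 5xx errors
    )
    return conn


def needs_external_data(question: str) -> bool:
    # Step 1, context identification: a keyword check stands in for the LLM's judgment.
    keywords = ("error", "latest", "current", "right now")
    return any(word in question.lower() for word in keywords)


def to_sql(min_status: int) -> tuple[str, tuple[int]]:
    # Step 2, query transformation: a fixed template stands in for LLM-generated SQL.
    return "SELECT COUNT(*) FROM access_log WHERE status >= ?", (min_status,)


def answer(question: str, conn: sqlite3.Connection) -> str:
    if not needs_external_data(question):
        return "No external data needed; answering from pre-trained knowledge."
    min_status = 500
    while True:
        sql, params = to_sql(min_status)
        # Step 3, data retrieval: run the structured query against the data source.
        count = conn.execute(sql, params).fetchone()[0]
        # Step 5, feedback optimization: if nothing matched, broaden the filter once and retry.
        if count > 0 or min_status <= 400:
            break
        min_status -= 100
    # Step 4, result fusion: combine the retrieved number with a templated explanation.
    return f"Found {count} request(s) with HTTP status >= {min_status}."


if __name__ == "__main__":
    conn = make_demo_db()
    print(answer("How many errors are there right now?", conn))
    # prints: Found 1 request(s) with HTTP status >= 400.
```

In a real deployment, steps 1, 2, 4, and 5 are performed by the LLM itself; only step 3 crosses the MCP boundary.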

Introducing GreptimeDB MCP Server

Learn More About GreptimeDB

GreptimeDB is an open-source, cloud-native observability database built for IoT, DevOps, and monitoring scenarios. It offers high-performance data ingestion, distributed scalability, and flexible data modeling.

Overview of GreptimeDB MCP Server

GreptimeDB MCP Server is an open-source implementation of the Model Context Protocol, developed by the GreptimeDB team. It enables LLMs to access and analyze observability data stored in GreptimeDB. The project is available on GitHub at https://github.com/GreptimeTeam/greptimedb-mcp-server.

Key Objectives

- Provide LLMs with a standardized interface for accessing observability data.

- Optimize natural language query transformation for efficient observability data retrieval.

- Ensure high-performance, low-latency querying of real-time data.

- Support complex observability and monitoring scenarios.

Use Cases

GreptimeDB MCP Server unlocks new possibilities in multiple domains:

- Intelligent System Monitoring – AI-driven system health analysis and anomaly detection.

- IoT Data Analysis – Transforming IoT sensor data into actionable insights.

- Financial Market Analysis – Real-time financial trend detection for decision-making.

- Health Monitoring – AI-powered patient health assessments based on sensor data.

- AI-Based Customer Support – Chatbots accessing historical user interactions for personalized responses.

- ...

We use GreptimeDB as an example to demonstrate the main interactions in MCP:

The LLM interprets the user's natural language input. If answering requires time-sensitive data (such as data stored in GreptimeDB), the LLM issues a data query through MCP and then summarizes and analyzes the returned results. This process may repeat multiple times and can also involve other data sources and tools.
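On the server side, an MCP server receives the LLM's tool call, runs the query, and returns the rows as text content for the LLM to summarize. The Python sketch below handles a single "tools/call" message. It is not the GreptimeDB MCP Server code: it uses SQLite instead of GreptimeDB so it runs anywhere, the tool name execute_sql and its query argument are hypothetical, and it omits the MCP initialize handshake and "tools/list" that a real server must implement.

```python
import json
import sqlite3


def handle_message(raw: str, conn: sqlite3.Connection) -> str:
    """Answer a single MCP "tools/call" request by running SQL (a sketch, not a full server)."""
    request = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        # JSON-RPC "method not found"; a real server also handles initialize, tools/list, etc.
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(reply)
    params = request.get("params", {})
    if params.get("name") != "execute_sql":  # hypothetical tool name
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
        return json.dumps(reply)
    query = params.get("arguments", {}).get("query", "")
    try:
        # A production server should restrict what SQL the LLM may run (e.g. read-only access).
        cursor = conn.execute(query)
        header = [col[0] for col in (cursor.description or [])]
        rows = [",".join(map(str, row)) for row in cursor.fetchall()]
        text = "\n".join([",".join(header), *rows])
        reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    except sqlite3.Error as exc:
        # Tool failures go back inside the result so the LLM can read the error and retry.
        reply["result"] = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    return json.dumps(reply)
```

Reporting SQL errors inside the result, rather than as a protocol error, is what makes the multi-round refinement described above possible: the LLM sees the message and can issue a corrected query.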

Getting Started with GreptimeDB MCP Server

Prerequisites

To set up GreptimeDB MCP Server, ensure the following:

- Install Docker and Docker Compose.

- Start the nginx access log analysis demo:

```shell
git clone https://github.com/GreptimeTeam/demo-scene.git
cd demo-scene/nginx-log-metrics
docker-compose up
```

Verify the setup by opening http://localhost:4000/dashboard/ in your browser.
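Besides opening the dashboard, you can check from a script that GreptimeDB is accepting connections. The Python sketch below only tests TCP reachability. It assumes the demo exposes port 4000 (the HTTP port behind the dashboard URL) and port 4002 (GreptimeDB's default MySQL-protocol port, which the MCP server configuration later in this article uses) on localhost; the helper name port_is_open is hypothetical.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    # Assumed ports: 4000 for HTTP/dashboard, 4002 for the MySQL protocol used by the MCP server.
    for port in (4000, 4002):
        state = "reachable" if port_is_open("localhost", port) else "NOT reachable"
        print(f"localhost:{port} is {state}")
```

A reachable port only shows that something is listening; the dashboard check remains the better signal that the demo data has been ingested.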

Setting Up MCP Server

- Install uv, a Python package manager, and install greptimedb-mcp-server:

```shell
pip install uv
git clone https://github.com/GreptimeTeam/greptimedb-mcp-server.git
cd greptimedb-mcp-server
pip install -r requirements.txt
uv pip install -e .
```

- Configure Claude Desktop, an MCP client, by adding the following to its configuration file:

  - Mac: ~/Library/Application Support/Claude/claude_desktop_config.json

  - Windows: %APPDATA%/Claude/claude_desktop_config.json

```json
{
  "mcpServers": {
    "greptimedb": {
      "command": "uv",
      "args": ["--directory", "/path/to/greptimedb-mcp-server", "run", "-m", "greptimedb_mcp_server.server"],
      "env": {
        "GREPTIMEDB_HOST": "localhost",
        "GREPTIMEDB_PORT": "4002",
        "GREPTIMEDB_USER": "root",
        "GREPTIMEDB_PASSWORD": "",
        "GREPTIMEDB_DATABASE": "public"
      }
    }
  }
}
```

Replace /path/to/greptimedb-mcp-server with the actual path to your local clone of the repository.
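If claude_desktop_config.json already lists other MCP servers, pasting the JSON above over the whole file would drop them. The Python sketch below instead merges the greptimedb entry into an existing file and leaves other entries untouched. The helper name add_greptimedb_server is hypothetical, and the connection values simply mirror the configuration shown above.

```python
import json
from pathlib import Path


def add_greptimedb_server(config_path: Path, repo_dir: str) -> dict:
    """Insert or update the "greptimedb" MCP server entry, keeping any other servers."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    servers = config.setdefault("mcpServers", {})
    # Same command, args, and env values as the configuration shown above.
    servers["greptimedb"] = {
        "command": "uv",
        "args": ["--directory", repo_dir, "run", "-m", "greptimedb_mcp_server.server"],
        "env": {
            "GREPTIMEDB_HOST": "localhost",
            "GREPTIMEDB_PORT": "4002",
            "GREPTIMEDB_USER": "root",
            "GREPTIMEDB_PASSWORD": "",
            "GREPTIMEDB_DATABASE": "public",
        },
    }
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2))
    return config
```

For example, on a Mac you might call add_greptimedb_server(Path.home() / "Library/Application Support/Claude/claude_desktop_config.json", "/path/to/greptimedb-mcp-server") with the path replaced by your actual clone location.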

Start Claude Desktop and begin exploring MCP-powered data analysis!

Conclusion & Future Outlook

GreptimeDB MCP Server gives LLMs real-time access to observability data, addressing the limitations of static training data. Through the Model Context Protocol, AI applications can perform dynamic data analysis across observability, IoT, and financial domains.

Moving forward, GreptimeDB MCP Server will evolve with:

- Enhanced built-in documentation and knowledge base.

- Advanced observability forecasting and anomaly detection.

- Expanded integration with LLM platforms.

- Optimized large-scale data processing performance.

- Improved security and compliance features.

- ...

GreptimeDB MCP Server is an open-source project, and we welcome contributions from the community to further advance AI-powered real-time analytics.

About Greptime

Greptime offers industry-leading time series database products and solutions to empower IoT and Observability scenarios, enabling enterprises to uncover valuable insights from their data with less time, complexity, and cost.

GreptimeDB is an open-source, high-performance time-series database offering unified storage and analysis for metrics, logs, and events. Try it out instantly with GreptimeCloud, a fully managed DBaaS solution with no deployment needed!

The Edge-Cloud Integrated Solution combines multimodal edge databases with cloud-based GreptimeDB to optimize IoT edge scenarios, cutting costs while boosting data performance.

Star us on GitHub or join the GreptimeDB Community on Slack to get connected.