Summary

Together with all our contributors worldwide, we are glad to see GreptimeDB making remarkable progress. Below are some highlights:

- Support DELETE in distributed mode
- Support querying external data
- Refactor remote catalog manager
- Support import/export of datasets in CSV and JSON formats

Contributor list: (in alphabetical order)

Over the past two weeks, our community has been very active: a total of 7 PRs from 3 contributors were merged, with many more pending review. Congratulations to our most active contributors of the past two weeks:

👏 Let's welcome @DevilExileSu and @NiwakaDev, who have joined our community as new contributors with 3 PRs merged respectively.

A big THANK YOU for the generous and brilliant contributions! It is people like you who are making GreptimeDB a great product. Let's build an even greater community together.

Highlights of Recent PRs

Support DELETE in distributed mode

We have been working on support for the DELETE SQL statement and initially shipped a minimal functional version. DELETE is now supported not only in standalone mode but also in distributed mode, through both gRPC and SQL.

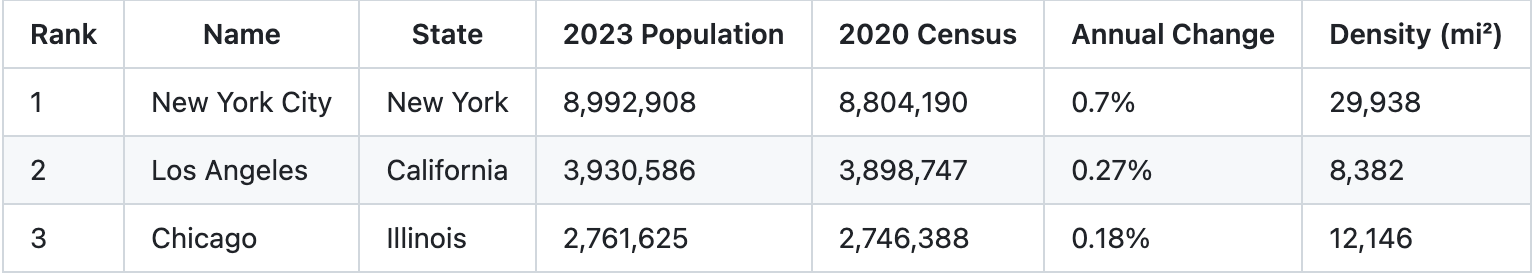

Support querying external data

When processing time series data, it is common to combine it with additional information. We now support the seamless creation of external tables from data in various formats, including JSON, CSV, and Parquet, which makes it easy to bring external data sources into queries. For example, suppose we have a CSV file on the local file system at /var/data/city.csv:

| Rank | Name | State | 2023 Population | 2020 Census | Annual Change | Density (mi²) |
|---|---|---|---|---|---|---|
| 1 | New York City | New York | 8,992,908 | 8,804,190 | 0.7% | 29,938 |
| 2 | Los Angeles | California | 3,930,586 | 3,898,747 | 0.27% | 8,382 |
| 3 | Chicago | Illinois | 2,761,625 | 2,746,388 | 0.18% | 12,146 |

...

Then we can create a table named city from it:

MySQL> CREATE EXTERNAL TABLE city with(location="/var/data/city.csv",format="csv");

And query it with SQL:

MySQL> select * from city;

We can even join it with time series data:

MySQL> select temperatures.value, city.population from temperatures
left join city on city.name = temperatures.city;

Refactor remote catalog manager

Arrow-datafusion provides a set of traits (CatalogList / CatalogProvider / SchemaProvider) that query engines use to look up tables by the triplet catalog name / schema name / table name. However, these traits are all synchronous, so we cannot perform async operations inside them to fetch tables from a remote catalog implementation, such as metasrv in distributed mode.

In this PR, instead of fetching tables from the underlying storage during planning, we resolve the tables referenced by a statement in advance and put them into a memory-based catalog list. As a result, we avoid the overhead of bridging the synchronous traits with an asynchronous implementation.
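The pre-resolution pattern can be sketched as a toy Python model. This is not GreptimeDB's Rust code, and every name in it is hypothetical: RemoteCatalog stands in for an async catalog such as metasrv, MemoryCatalogList for the memory-based catalog list, and extracting table names from the parsed statement is assumed to have already happened (the table_refs argument). The point it illustrates is that every await happens in resolve_tables, before planning, so the planner only performs synchronous lookups.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Table:
    """Hypothetical table entity identified by catalog/schema/name."""
    catalog: str
    schema: str
    name: str


class RemoteCatalog:
    """Hypothetical async catalog backed by a remote service (e.g. metasrv)."""

    def __init__(self, tables):
        self._tables = {(t.catalog, t.schema, t.name): t for t in tables}
        self.calls = 0  # counts simulated remote round trips

    async def get_table(self, catalog, schema, name):
        self.calls += 1
        await asyncio.sleep(0)  # simulate a network round trip
        return self._tables.get((catalog, schema, name))


@dataclass
class MemoryCatalogList:
    """Synchronous, in-memory catalog consulted by the planner."""
    tables: dict = field(default_factory=dict)

    def register(self, table):
        self.tables[(table.catalog, table.schema, table.name)] = table

    def table(self, catalog, schema, name):
        # Pure dictionary lookup: no I/O, so sync code can call it freely.
        return self.tables.get((catalog, schema, name))


async def resolve_tables(remote, table_refs):
    """Fetch every referenced table concurrently, before planning starts."""
    unique_refs = list(dict.fromkeys(table_refs))  # dedupe, keep order
    fetched = await asyncio.gather(
        *(remote.get_table(*ref) for ref in unique_refs)
    )
    catalog = MemoryCatalogList()
    for ref, table in zip(unique_refs, fetched):
        if table is None:
            raise LookupError(f"table not found: {'.'.join(ref)}")
        catalog.register(table)
    return catalog


def plan(catalog, table_refs):
    """Stand-in 'planner' that only uses synchronous lookups."""
    return [catalog.table(*ref) for ref in table_refs]


if __name__ == "__main__":
    remote = RemoteCatalog([
        Table("greptime", "public", "temperatures"),
        Table("greptime", "public", "city"),
    ])
    refs = [("greptime", "public", "temperatures"), ("greptime", "public", "city")]
    memory_catalog = asyncio.run(resolve_tables(remote, refs))
    print([t.name for t in plan(memory_catalog, refs)])  # ['temperatures', 'city']
```

Deduplicating the references and fetching them concurrently with asyncio.gather keeps remote round trips to one per distinct table, and a missing table fails before planning begins rather than in the middle of it.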

Support import/export of datasets from CSV and JSON files

We now support importing datasets from, and exporting them to, CSV and JSON files. The main changes are:

- Add ParquetRecordBatchStreamAdapter (ParquetRecordBatchStream -> DataFusion RecordBatchStream)
- Refactor the copy from executor
- Support copying from CSV and JSON files
- Support copying a table to CSV and JSON files
- Add tests
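To make the import/export round trip concrete, here is a toy Python sketch, not GreptimeDB's implementation: rows are written out as CSV (with a header line) or as JSON, then read back and cast to the table's column types. The SCHEMA dict, the row layout, and the choice of one JSON object per line are assumptions for illustration only; GreptimeDB's actual executors work on Arrow record batches.

```python
import csv
import io
import json

# Hypothetical table schema: column name -> type used to restore values.
SCHEMA = {"host": str, "ts": int, "cpu": float}


def export_csv(rows):
    """Serialize rows (a list of dicts) to CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(SCHEMA))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def import_csv(text):
    """Parse CSV text and cast each column back to its schema type."""
    reader = csv.DictReader(io.StringIO(text))
    return [{col: SCHEMA[col](row[col]) for col in SCHEMA} for row in reader]


def export_json(rows):
    """Serialize rows as newline-delimited JSON, one object per row (assumed layout)."""
    return "".join(json.dumps(row) + "\n" for row in rows)


def import_json(text):
    """Parse newline-delimited JSON, skipping blank lines, and cast columns."""
    rows = []
    for line in text.splitlines():
        if line.strip():
            obj = json.loads(line)
            rows.append({col: SCHEMA[col](obj[col]) for col in SCHEMA})
    return rows


if __name__ == "__main__":
    table = [
        {"host": "host1", "ts": 1, "cpu": 0.5},
        {"host": "host2", "ts": 2, "cpu": 12.25},
    ]
    print(import_csv(export_csv(table)) == table)    # True
    print(import_json(export_json(table)) == table)  # True
```

Casting against the schema on import matters because CSV stores every value as text; JSON preserves numbers but not richer types such as timestamps, so both paths need the table schema to restore values faithfully.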

These are the latest updates to GreptimeDB, and we are constantly making progress. We believe the strength of our software comes from the strengths of each individual community member. Thank you for all your contributions.