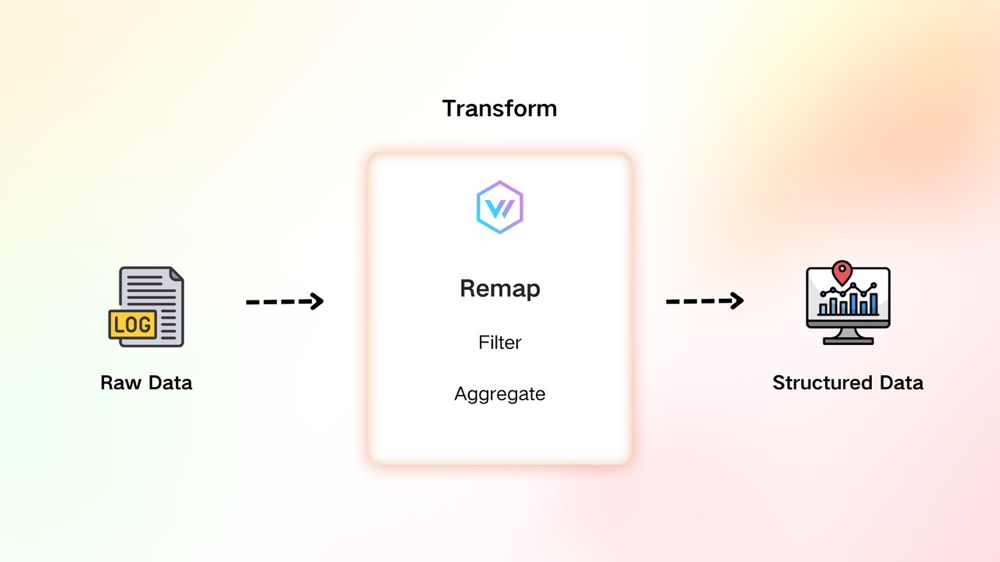

In modern observability workflows, raw data collected by agents often needs trimming or restructuring before it yields useful insight. Transforming log data, and routing it to different storage systems based on its content, improves structure and queryability and reduces the storage consumed by irrelevant information.

To meet this need, Vector offers a Transforms feature built around the Vector Remap Language (VRL). VRL lets users define custom processing logic for a wide range of data processing scenarios.

VRL is an expression-oriented language designed specifically to transform observability data (logs and metrics) in a safe and efficient manner. With simple syntax and a rich set of built-in functions, VRL is tailored for observability use cases.

In the previous blog, "Vector in Action - An Open-Source Tool for Logs and Metrics Collection," we introduced the basic concepts of Vector and demonstrated how to use simple transforms to process NGINX logs. Vector's Transform feature includes methods such as aggregate, dedupe, filter, and remap.

In this blog, let's discuss everything about remap.

TL;DR

Remap allows you to customize transform logic through VRL. Let’s start with a simple example:

[sources.demo_source]

type = "demo_logs"

format = "apache_common"

lines = [ "line1" ]

[transforms.transform_apache_common_log]

type = "remap"

inputs = [ "demo_source" ]

drop_on_error = true

drop_on_abort = true

reroute_dropped = true

source = """

log = parse_apache_log!(.message,format: "common")

if to_int(log.status) > 300 {

abort

}

. = log

.mark = "transform_apache_common_log"

"""

[sinks.transform_apache_common_log_sink]

type = "console"

inputs = [ "transform_apache_common_log" ]

encoding.codec = "json"

[sinks.demo_source_sink]

type = "console"

inputs = [ "demo_source" ]

encoding.codec = "json"

[transforms.transform_apache_common_log_dropped]

type = "remap"

inputs = [ "transform_apache_common_log.dropped" ]

source = """

.mark = "dropped"

"""

[sinks.dropped_msg_sink]

type = "console"

inputs = [ "transform_apache_common_log_dropped" ]

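encoding.codec = "json"

Starting Vector with this configuration file produces three types of JSON output: raw events from demo_source, parsed events marked transform_apache_common_log, and dropped events marked dropped. We will use these JSON examples to explain the logic behind the VRL code.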

Application Logic of VRL in Transform

The example above provides a clear overview of transforms. In the [transforms.transform_apache_common_log] section of the configuration, we define a transform named transform_apache_common_log. Below, we'll dive into the specifics of this transform, with a particular focus on the VRL logic within the source field.

The VRL in the source field can be divided into three key parts:

1. Parsing

log = parse_apache_log!(.message, format: "common")

Like most assignment statements in programming languages, this line assigns the result of the expression on the right-hand side to the log variable. The expression parses the message field from the original event and converts it into a structured key-value format based on the Apache Common log format.

Breaking it down further: it calls the built-in function parse_apache_log, which accepts two parameters:

- .message
- format: "common"

The .message here accesses the message field of the current event. The format of the current event is as follows:

{

"host": "localhost",

"message": "218.169.11.238 - KarimMove [25/Nov/2024:04:21:15 +0000] \"GET /wp-admin HTTP/1.0\" 410 36844",

"service": "vector",

"source_type": "demo_logs",

"timestamp": "2024-11-25T04:21:15.135802380Z"

}

In addition to the message field, the event includes metadata such as host and source_type. Apache log formats offer variations like common and combined, and the second parameter here specifies using the common format to parse the incoming log content.
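For comparison, here is a minimal sketch of the same parsing step for the combined format, which extends common with referrer and user-agent information. The transform name parse_combined and the input combined_source are hypothetical; the sketch assumes an upstream source that actually emits combined-format lines, which the demo source above does not.

```toml
# Hypothetical transform; "combined_source" is an assumed source that
# emits Apache combined-format access logs.
[transforms.parse_combined]
type = "remap"
inputs = [ "combined_source" ]
source = """
# Same built-in function as before, with a different format argument.
. = parse_apache_log!(.message, format: "combined")
"""
```

Only the format argument changes; the rest of the transform can stay as it is in the common-format example.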

You may also notice the exclamation mark (!) after parse_apache_log. Unlike its use in Rust macros, this exclamation mark represents a specific error-handling mechanism, which we’ll discuss in detail in the VRL error-handling section.

If the parsing succeeds, the log variable is assigned a structured representation of the Apache common format. This transforms the original plain string into a set of key-value pairs, enabling easier filtering, aggregation, and processing in subsequent operations.

{

"host": "67.210.39.57",

"message": "DELETE /user/booperbot124 HTTP/1.1",

"method": "DELETE",

"path": "/user/booperbot124",

"protocol": "HTTP/1.1",

"size": 14865,

"status": 300,

"timestamp": "2024-11-26T06:24:17Z",

"user": "meln1ks"

}

2. Filtering and Error Handling

Once the log is structured, further filtering can be applied.

If we are not interested in data where the status is greater than 300, we can configure the transform to abort when log.status > 300. You can always customize your logic based on the actual requirement.

if to_int(log.status) > 300 {

abort

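}

If drop_on_abort is disabled, an event that triggers abort is passed along unmodified to the next processing unit. Since we are not interested in this data, we discard it instead. In current Vector releases drop_on_abort defaults to true while drop_on_error defaults to false, so the example sets both explicitly.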

To implement this, use the following configuration:

drop_on_error = true

drop_on_abort = true

However, if unforeseen issues cause errors during VRL execution (such as type or parsing errors), data we care about may be dropped as well, leaving gaps in the information. To mitigate this, set reroute_dropped = true. Vector then adds metadata to each dropped event and routes it to a dedicated <current_transform_id>.dropped output, such as transform_apache_common_log.dropped in the example above.

{

"host": "localhost",

"mark": "dropped",

"message": "8.132.254.222 - benefritz [25/Nov/2024:07:56:35 +0000] \"POST /controller/setup HTTP/1.1\" 550 36311",

"metadata": {

"dropped": {

"component_id": "transform_apache_common_log",

"component_kind": "transform",

"component_type": "remap",

"message": "aborted",

"reason": "abort"

}

},

"service": "vector",

"source_type": "demo_logs",

"timestamp": "2024-11-25T07:56:35.126819716Z"

}

By monitoring this output, we can track which data has been dropped. Depending on the situation, we can adjust the VRL to make use of the dropped data as needed.
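As a rough sketch of how the dropped output could be put to use, the transform below reads the metadata shown in the sample event and tags events differently depending on why they were dropped. The transform name tag_dropped_reason and both mark values are made up for illustration, and treating every reason other than "abort" as an error is an assumption.

```toml
# Hypothetical transform that consumes the dropped output of the
# transform defined earlier.
[transforms.tag_dropped_reason]
type = "remap"
inputs = [ "transform_apache_common_log.dropped" ]
source = """
# The sample event above carries reason = "abort"; any other value is
# assumed here to indicate a runtime error.
if .metadata.dropped.reason == "abort" {
  .mark = "filtered_by_status"
} else {
  .mark = "needs_investigation"
}
"""
```

Separating intentional aborts from unexpected errors makes it easier to spot events that were lost because of a bug in the VRL rather than by design.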

3. Replacement

. = log

.mark = "transform_apache_common_log"

In the code above, the dot (.) on the left side of the first line refers to the current event being processed in VRL. The expression on the right, which is the previously parsed structured log, will replace the current event.

The second line adds a mark field to the event, indicating that the event originated from the transform_apache_common_log transform.

As a result, the event is now structured in the following format, which will replace the original format and be passed downstream.

{

"host": "67.210.39.57",

"mark": "transform_apache_common_log",

"message": "DELETE /user/booperbot124 HTTP/1.1",

"method": "DELETE",

"path": "/user/booperbot124",

"protocol": "HTTP/1.1",

"size": 14865,

"status": 300,

"timestamp": "2024-11-26T06:24:17Z",

"user": "meln1ks"

}

VRL Error Handling

In the above section, when calling parse_apache_log, we added a ! at the end. This is an error handling mechanism in VRL. Since the fields in an event can have various types, many functions might return errors when the logic or data type does not meet expectations. To handle such cases, an error handling mechanism is necessary.

A fallible function such as parse_apache_log can be assigned to two variables: the first receives the parsed value on success, and the second receives an error message on failure, such as:

result, err = parse_apache_log(.message, format: "common")

When err is not null, an error occurred during the function call. In VRL, adding a ! after the function name is a syntactic shortcut: if an error is encountered, the current VRL program is interrupted immediately and the error is raised.

Conceptually, the shortcut behaves something like this:

result, err = parse_apache_log(.message, format: "common")

if err != null {

# Pseudocode: stop the program here and raise err.

panic

}

If your message contains a mix of different data formats, using ! to stop at the first failure may not be the most appropriate approach. In cases where the event field could be either JSON or in Apache Common format, you might need a configuration like the following to ensure structured log output:

structured, err = parse_json(.message)

if err != null {

log("Unable to parse JSON: " + err, level: "error")

. = parse_apache_log!(.message,format: "common")

} else {

. = structured

}

We cannot simply terminate the transform when parse_json fails; instead, we attempt to parse the message using the Apache Common format and decide what to do based on that result.
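When the intermediate error does not need to be logged, the same fallback chain can be sketched more compactly with VRL's error-coalescing operator (??). The transform name parse_json_or_apache and the target field .parsed are hypothetical, and falling back to an empty object when both parsers fail is an assumption made for this sketch.

```toml
# Hypothetical transform illustrating the ?? operator.
[transforms.parse_json_or_apache]
type = "remap"
inputs = [ "demo_source" ]
source = """
# Try JSON first, then Apache Common; if both fail, use an empty object.
.parsed = parse_json(.message) ?? parse_apache_log(.message, format: "common") ?? {}
"""
```

The trade-off is that failures are swallowed silently, so the explicit err check above remains the better choice when you want to log or count parse errors.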

Summary

Vector's Transform functionality incorporates VRL (Vector Remap Language), which supports custom data processing logic. With Remap, we can perform operations such as parsing, filtering, and replacing on raw data. VRL's error handling mechanism helps keep the transform process stable and reduces the risk of task failures or data loss caused by unexpected data. VRL also comes with an extensive function library that covers most common data formats, which shortens development time and makes data processing more flexible.

For more details, refer to the official VRL documentation.
